Here’s a quick reminder before you get on with your day: Think twice before you upload your private medical data to an AI chatbot.

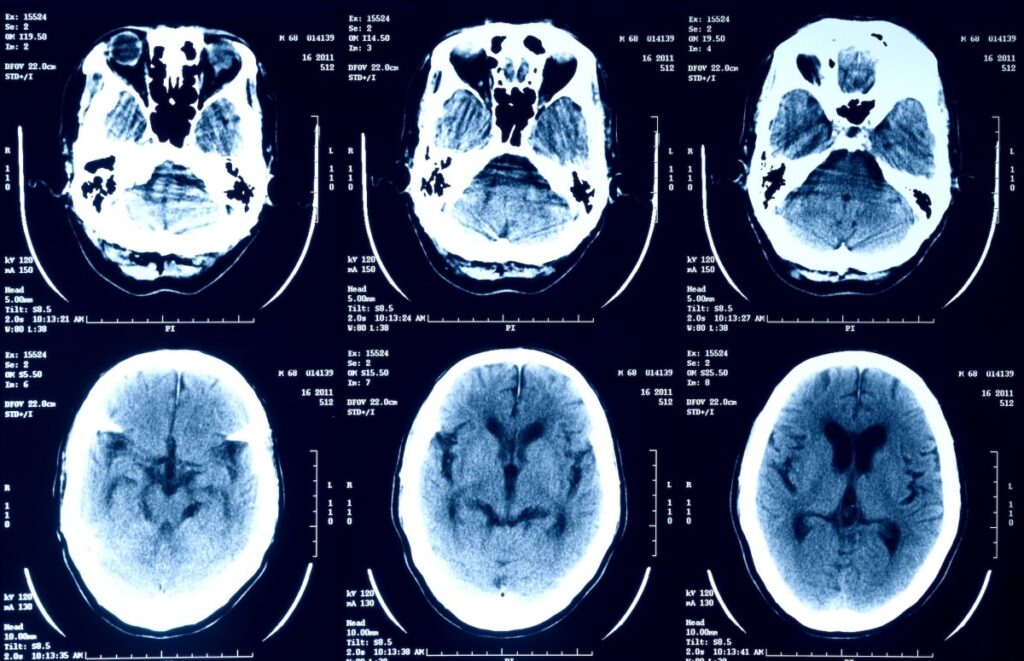

Folks are frequently turning to generative AI chatbots, like OpenAI’s ChatGPT and Google’s Gemini, to ask questions about their medical concerns and to better understand their health. Some have relied on questionable apps that use AI to decide whether someone’s genitals are free of disease, for example. And most recently, since October, users on the social media site X have been encouraged to upload their X-rays, MRIs, and PET scans to the platform’s AI chatbot Grok to help interpret their results.

Medical data is a special category with federal protections that, for the most part, only you can choose to circumvent. But just because you can doesn’t mean you should. Security and privacy advocates have long warned that any sensitive data you upload can then be used to train AI models, and that doing so risks exposing your private information down the line.

Generative AI models are often trained on the data they receive, on the premise that uploaded data helps improve the breadth and accuracy of the model’s outputs. But it’s not always clear how and for what purposes uploaded data is being used, or whom it is shared with, and companies can change their minds. You largely have to take the companies at their word.

People have found their own private medical records in AI training data sets, which means anybody else can find them too, including healthcare providers, potential future employers, or government agencies. And most consumer apps aren’t covered by the U.S. healthcare privacy law HIPAA, so the law offers no protection for the data you upload to them.

X owner Elon Musk, who in a post encouraged users to upload their medical imagery to Grok, conceded that the results from Grok are “still early stage,” but said that the AI model “will become extremely good.” The aim of asking users to submit their medical imagery is that Grok will improve over time and become capable of interpreting medical scans with consistent accuracy. Who has access to this Grok data isn’t clear; as noted elsewhere, Grok’s privacy policy says that X shares some users’ personal information with an unspecified number of “related” companies.

It’s good to remember that what goes on the internet never leaves the internet.