An HN user asked me [0] how I run LLMs locally, along with some specific questions, so I’m documenting my setup here for everyone.

Before I begin, I would like to credit the thousands or millions of unknown artists, coders and writers upon whose work the Large Language Models (LLMs) are trained, often without due credit or compensation.

Get Started

The r/LocalLLaMA subreddit [1] and the Ollama blog [2] are great places to get started with running LLMs locally.

Hardware

I have a laptop running Linux with a Core i9 CPU (32 threads), a 4090 GPU (16 GB VRAM) and 96 GB of RAM. Models that fit within the VRAM generate more tokens/second; larger models are offloaded to RAM (dGPU offloading), which lowers tokens/second. I talk about models in a section below.
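To make the "fits within the VRAM" point concrete, here is a back-of-the-envelope sketch. It assumes the weights take roughly (parameters × bits per weight ÷ 8) bytes and adds a flat 20% allowance for the context cache and runtime buffers. The function names and the 20% figure are illustrative assumptions, not measurements from this machine.

```python
def estimate_weights_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_weight / 8


def fits_in_vram(params_billions: float, bits_per_weight: float,
                 vram_gb: float, overhead: float = 1.2) -> bool:
    """Rough check: weights plus an assumed 20% overhead must fit in VRAM."""
    return estimate_weights_gb(params_billions, bits_per_weight) * overhead <= vram_gb


# An 8B model quantized to 4 bits needs about 4 GB for its weights,
# while a 70B model at 4 bits needs about 35 GB.
print(estimate_weights_gb(8, 4))   # 4.0
print(fits_in_vram(8, 4, 16))      # True
print(fits_in_vram(70, 4, 16))     # False
```

By this estimate, a 16 GB card holds small and mid-sized quantized models entirely, while a 70B model would have to spill into system RAM.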

It’s not necessary to have such a beefy computer to run LLMs locally; smaller models run fine on older GPUs or on a CPU, albeit slowly and with more hallucinations.

There are a number of high-quality open-source tools that enable running LLMs locally. These are the tools I use regularly.

Ollama [3] is middleware with Python and JavaScript libraries, built on llama.cpp [4], that helps run LLMs. I use Ollama in Docker [5].
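As a minimal sketch of talking to Ollama from Python, the snippet below calls its REST endpoint `/api/generate` using only the standard library. It assumes the server listens on the default `http://localhost:11434` (for a Docker container, that port would need to be published) and that the named model has already been pulled. The helper names `build_generate_request` and `generate` are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama is reachable on its default port on this machine.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str,
                           url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt and return the generated text from the JSON reply."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `generate("llama3.2", "Why is the sky blue?")` would return the model's answer as a string.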

Open WebUI [6] is a frontend that offers a familiar chat interface for text and image input. It communicates with the Ollama back-end and streams the output back to the user.
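To illustrate what streaming looks like on the wire: with `"stream": true`, Ollama's `/api/generate` sends newline-delimited JSON objects, each carrying a `response` fragment and a `done` flag. The sketch below shows how a client could stitch those fragments together. It is not Open WebUI's actual code; `iter_tokens` is a hypothetical helper and the sample lines are hand-written.

```python
import json
from typing import Iterable, Iterator


def iter_tokens(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from a newline-delimited JSON stream."""
    for raw in lines:
        if not raw.strip():
            continue  # skip blank keep-alive lines
        chunk = json.loads(raw)
        yield chunk.get("response", "")
        if chunk.get("done"):
            break  # the final object marks the end of the stream


# Hand-written sample of what a streamed reply could look like.
sample = [
    b'{"response": "Hel", "done": false}\n',
    b'{"response": "lo", "done": false}\n',
    b'{"response": "", "done": true}\n',
]
print("".join(iter_tokens(sample)))  # Hello
```

In practice the lines would come from iterating over the HTTP response object of a streaming request, and each fragment would be shown to the user as soon as it arrives.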

llamafile [7] packages an LLM as a single executable file. It’s probably the easiest way to get started with local LLMs, but I’ve had issues with dGPU offloading in llamafile [8].

I’m not a big consumer of image/video generation models, but when needed, I use AUTOMATIC1111 [9] for images which require some customisation and Fooocus [10] for simple image generation. For complex workflow automation with image generation, there’s ComfyUI [11].

For code completion I use Continue [12] in VSCode.

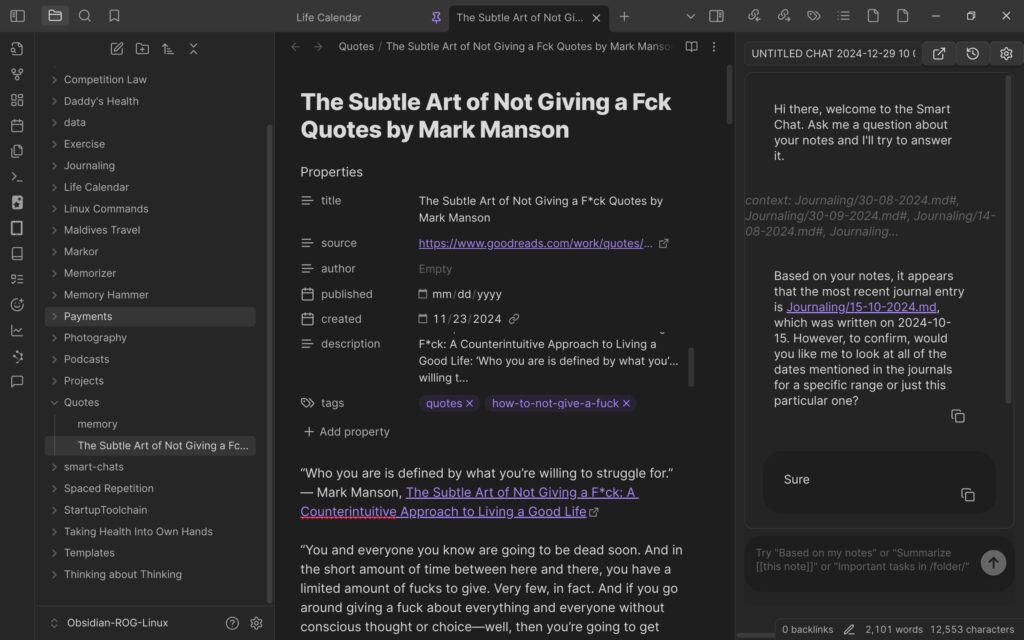

I use Smart Connections [13] in Obsidian [14] to query my notes using Ollama.

Models

I use the Ollama models page [15] to download the latest LLMs, and RSS in Thunderbird to keep track of new models. I use CivitAI [16] to download image generation models for specific styles (e.g. isometric for world building). Be advised that most models on CivitAI seem to cater to adult image generation.

I choose LLMs based on performance relative to size. My current choices change frequently due to rapid advancement in LLMs.

• Llama3.2 for Smart Connections and generic queries.

• Deepseek-coder-v2 for code completion in Continue.

• Qwen2.5-coder for chatting about code in Continue.

• Stable Diffusion for image generation in AUTOMATIC1111 or Fooocus.

Updating

I update the Docker containers using Watchtower [17] and the models from within Open WebUI.

Fine-Tuning and Quantization

I haven’t fine-tuned or quantized any models on my machine yet. My Intel CPU may have a manufacturing defect [18], so I don’t want to push it to high temperatures for long durations during training.

Conclusion

Running LLMs locally gives me total control over my data and lower latency for responses. None of this would be possible without the open-source projects, the free open-source models, and the original owners of the data upon which these models are trained.

I will update this post as and when I use newer tools/models.

[0] https://news.ycombinator.com/item?id=42537024

[1] https://www.reddit.com/r/LocalLLaMA/

[2] https://ollama.com/blog

[3] https://ollama.com/download

[4] https://github.com/ggerganov/llama.cpp

[5] https://hub.docker.com/r/ollama/ollama

[6] https://github.com/open-webui/open-webui

[7] https://github.com/Mozilla-Ocho/llamafile

[8] https://github.com/Mozilla-Ocho/llamafile/issues/611

[9] https://github.com/AUTOMATIC1111/stable-diffusion-webui

[10] https://github.com/lllyasviel/Fooocus

[11] https://github.com/comfyanonymous/ComfyUI

[12] https://docs.continue.dev/getting-started/overview

[13] https://github.com/brianpetro/obsidian-smart-connections

[14] https://obsidian.md

[15] https://ollama.com/search

[16] https://civitai.com/models/63376/isometric-chinese-style-architecture-lora

[17] https://containrrr.dev/watchtower/

[18] https://en.wikipedia.org/wiki/Raptor_Lake#Instability_and_degradation_issue
